SINGAPORE – 31 MAY 2024

Building a trusted and innovative ecosystem for AI

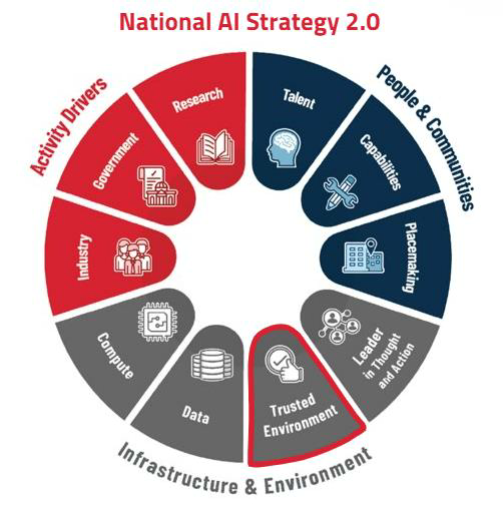

1. Under the National AI Strategy 2.0¹, building a trusted environment for AI is one of the key enablers supporting the goals of excellence and empowerment in achieving AI for the public good, for Singapore and the world.

2. As AI evolves rapidly, it brings both significant opportunities and significant risks. To harness the capabilities of AI, IMDA is seeking to establish guardrails that manage the risks while leaving space for innovation. It is important to adopt an agile, test-and-iterate approach to address key risks in model development and use.

IMDA stays ahead of the curve globally by making early moves to harness the power of AI in a safe and beneficial way.

3. Since 2019, IMDA has made early moves to build trust around AI and drive its responsible use. The following timeline captures the key milestones in AI governance over the past five years:

- 2019-2020: Among the world's first to articulate AI governance principles through the Model AI Governance Framework (MGF) V1 for Traditional AI.

- 2022: One of the very few to go beyond principles and frameworks to develop an end-to-end AI testing tool: the AI Governance Testing Framework and Toolkit for traditional AI. This minimum viable product (MVP) pulls known testing approaches into one toolkit for black-box testing, helping companies evaluate their traditional AI solutions².

- 2023: The AI Verify Foundation (AIVF) was launched to harness the collective power and contributions of the global open-source community in developing AI testing tools that enable responsible AI. The Foundation also promotes best practices and standards for AI³.

- The AI Verify Foundation and IMDA recommended an initial set of standardised model safety evaluations for Large Language Models (LLMs), covering robustness, factuality, propensity to bias, toxicity generation and data governance. These are set out in the paper Cataloguing LLM Evaluations, issued in October 2023⁴, which provides both a landscape scan and practical guidance on which safety evaluations may be considered.

- In October 2023, IMDA and the US National Institute of Standards and Technology (NIST) completed a joint mapping exercise between IMDA's AI Verify and NIST's AI Risk Management Framework (AI RMF)⁵. This is an important step towards harmonising international AI governance requirements and reducing industry's cost of meeting multiple requirements.

- 2024: Finalised the Model AI Governance Framework for Generative AI (MGF-GenAI), the first comprehensive framework to pull together the different strands of the global conversation⁶.

IMDA is making the first move towards a global set of standards for LLM testing via collaborations with internationally recognised organisations through Project Moonshot.

4. Large Language Models (LLMs) without guardrails can reinforce biases and create harmful content, with unintended consequences. To keep pace, IMDA is extending the work under the AI Verify Foundation into Generative AI.

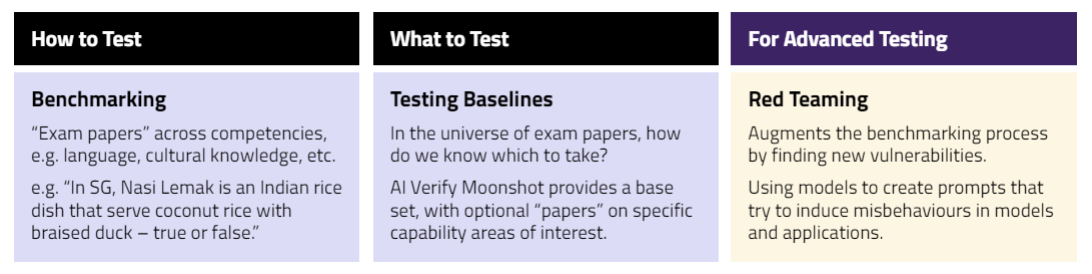

5. Project Moonshot is one of the first tools in the world to bring benchmarking, red teaming and testing baselines together, so that developers can focus on what matters to them.

6. Project Moonshot (“Moonshot”) helps developers and system owners manage LLM deployment risks via benchmarking and red teaming.

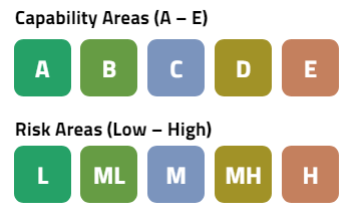

7. Project Moonshot presents its results intuitively, so that testing reveals the quality and safety of a model or application in a way that even non-technical users can easily understand.

- It uses a five-tier scoring system: each "exam paper" completed by the application is graded on a five-tier scale, with the grade cut-offs set by the author of that "exam paper".
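To make the grading idea concrete, here is a minimal sketch of how an author-defined, five-tier scale could map a raw score to a grade. It is a hypothetical illustration only: the 0 to 100 score range, the tier labels A to E, the function name `grade` and the default cut-off values are all assumptions, not Project Moonshot's actual configuration format or API.

```python
from bisect import bisect_right
from typing import Sequence

# Hypothetical author-chosen cut-offs: the lower bound of each tier on an
# assumed 0-100 score scale, ordered from the lowest tier (E) to the highest (A).
DEFAULT_CUTOFFS = (0, 20, 40, 60, 80)
TIERS = ("E", "D", "C", "B", "A")


def grade(score: float,
          cutoffs: Sequence[float] = DEFAULT_CUTOFFS,
          tiers: Sequence[str] = TIERS) -> str:
    """Return the tier whose lower bound is the largest cut-off <= score."""
    if len(cutoffs) != len(tiers):
        raise ValueError("need exactly one cut-off per tier")
    # Cut-offs must be strictly ascending for the tier lookup to make sense.
    if any(lo >= hi for lo, hi in zip(cutoffs, cutoffs[1:])):
        raise ValueError("cut-offs must be strictly ascending")
    if score < cutoffs[0]:
        raise ValueError("score is below the lowest cut-off")
    # bisect_right counts how many cut-offs are <= score; subtract 1 for the index.
    return tiers[bisect_right(cutoffs, score) - 1]
```

Because the cut-offs are a parameter, two authors can grade the same result differently: a score of 85 is an "A" under the default cut-offs above, but only a "B" under stricter cut-offs such as `(0, 50, 70, 85, 95)`.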

8. To standardise LLM testing, IMDA and AIVF are working with like-minded partners to ensure that the tool is useful and aligned with industry needs.

- DataRobot, IBM, Singtel, Temasek and Resaro are among the like-minded partners that have provided design inputs and feedback for Project Moonshot.

- The AI Verify Foundation and MLCommons, two of the leading AI testing organisations globally, are coming together to build a common testing benchmark for large language models. This will give developers clarity on what to test and how, so that their applications meet a defined threshold of safety and quality. MLCommons is an open engineering consortium supported by Qualcomm, Google, Intel and NVIDIA, and is recognised by the US National Institute of Standards and Technology (NIST) under its AI Safety Institute Consortium.

9. Project Moonshot will be released into open beta on 31 May 2024.

10. IMDA is also working with frontier companies such as Anthropic to advance third-party testing and R&D by developing the first-ever practical guide to multilingual and multicultural red teaming for LLMs. The guide will be released later this year for global use.

Singapore continues to adopt a practical, risk-based AI governance approach that moves in tandem with developments in AI.

11. The AI Verify Foundation (AIVF) has grown from 60 members to over 120 members over the last year, with Amazon Web Services (AWS) and Dell joining as new premier members.

- Members include AI end-user companies (e.g. Mastercard, UBS, Sony, Singapore Airlines and Lazada), AI solution providers (e.g. DataRobot, Meta, HP Enterprise, SenseTime and Huawei) and companies providing AI testing services and solutions (e.g. Citadel AI, Credo AI, Deloitte, Resaro and Truera).

12. AIVF is enhancing the AI Verify Toolkit by pursuing greater alignment with emerging standards and sector-specific requirements, such as:

- Completion of mapping with ISO/IEC 42001:2023 Information technology — Artificial intelligence — Management system⁷.

- Integration with the Monetary Authority of Singapore's (MAS) Veritas Toolkit⁸.

13. On 30 May 2024, IMDA also signed a Memorandum of Intent with Microsoft to further engagement on a content provenance proof of concept and on the development of related policies and standards.

Internationally, Singapore has played an active role in bringing together governments, industry players and the open-source community to build responsible and trustworthy AI.

14. IMDA is the overarching body overseeing AI governance in Singapore, while NTU's Digital Trust Centre (DTC)⁹ is a national centre for research in trust technology, spearheading efforts to develop trust technologies and strengthen Singapore's status as a trusted hub in the digital economy. As the AI landscape develops, DTC will take on the role of Singapore's AI Safety Institute (SG AISI) to ensure the safe and responsible use of AI.

- SG AISI will pull together Singapore's research ecosystem, collaborate internationally with other AISIs to advance the science of AI safety for national AI governance and international frameworks, and provide science-based input to our work in AI governance.

15. Digital Forum of Small States (DFOSS) AI Governance Playbook

- Singapore and Rwanda are collaborating on the development of an AI Governance Playbook for small states, through open consultations at the DFOSS meeting held on 29 May 2024.

- The playbook is targeted for completion and launch in the second half of 2024.
