SSNLP 2025 and IMDA Technical Sharing Session

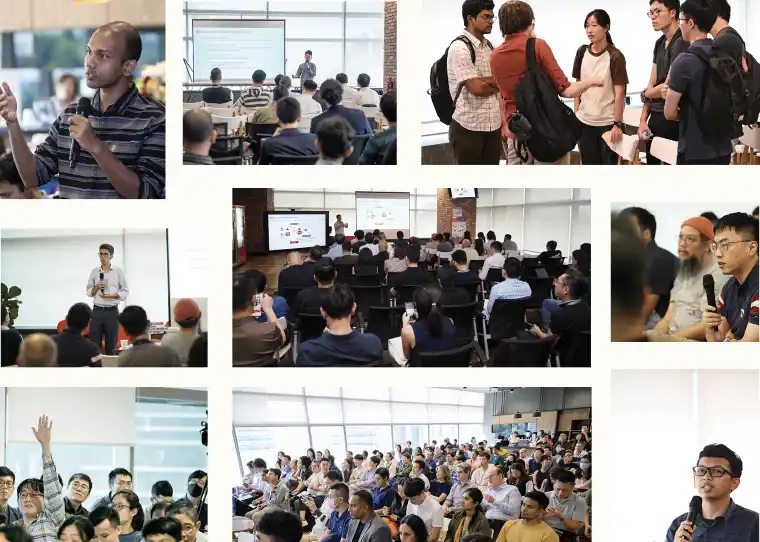

IMDA’s Technical Sharing Session is a regular platform where esteemed researchers are invited to present on the latest emerging tech topics, and attendees can expect to:

- Gain invaluable insights from esteemed experts

- Network with like-minded technical experts

- Uncover opportunities for collaboration and innovation

Time and location

23 April 2025, 9:00am to 5:00pm

Summary

This session is held in conjunction with the annual Singapore Symposium on Natural Language Processing (SSNLP), a platform for academic and industry researchers in Singapore to present ongoing and upcoming work, fostering community building, idea exchange, and collaboration. SSNLP'25 is an excellent opportunity for faculty and students to gain international exposure and engage with leading experts in the field.

Featured guests

Nanyun (Violet) Peng

Associate Professor, UCLA

Faeze Brahman

Research Scientist, Allen Institute for AI (Ai2)

Mohit Bansal

Parker Distinguished Professor, UNC Chapel Hill

Junyang Lin

Research Scientist, Alibaba Qwen

Speakers

Nanyun (Violet) Peng

Associate Professor, UCLA

Nanyun is an Associate Professor in the Computer Science Department at the University of California, Los Angeles, and a Visiting Academic at Amazon's AGI organization. She directs the PLUSLAB (Peng's Language Understanding and Synthesis Lab) at UCLA, with the vision of developing robust Natural Language Processing (NLP) techniques that lower communication barriers and make AI agents true companions for humans.

Central to her vision is the advancement of AI creativity, which she believes is crucial for fostering more inspiring and productive human-AI interactions. Equipping AI systems with creative capabilities enables them to interact in more engaging, contextually rich ways and to offer innovative solutions to complex problems.

To realize this vision, her group's research spans controllable (creative) language generation, multi-modal foundation models, automatic evaluation of foundation models, and low-resource, multilingual natural language understanding (NLU). By integrating these diverse areas, the group aims to create AI systems that not only understand and generate language with precision but also exhibit the creativity needed to engage in meaningful, contextually rich dialogues. Their ultimate goal is to empower AI to assist, collaborate, and inspire, thereby enhancing the quality of human life through innovative and accessible technological solutions.

Nanyun received her PhD in Computer Science from Johns Hopkins University, where she was at the Center for Language and Speech Processing. She then spent three years at the University of Southern California as a Research Assistant Professor in the Computer Science Department and a Research Lead at the Information Sciences Institute.

Faeze Brahman

Research Scientist, Allen Institute for AI (Ai2)

Faeze is a research scientist at the Allen Institute for AI (Ai2). Until recently, she was a postdoctoral researcher at the Allen Institute for AI and the University of Washington, working with Yejin Choi. She received her PhD in Computer Science from the University of California, Santa Cruz, in 2022, working with Snigdha Chaturvedi.

She holds a master’s degree (2018) in Computer Science, and a master’s (2014) and bachelor’s (2012) degree in Electrical Engineering. Her research focuses on understanding language models’ capabilities and limitations in the wild, under unseen or changing conditions, and on developing efficient algorithms that address these limitations beyond just scaling. This includes better designs for collaborative strategies and HCI interfaces that combine the best of human and AI abilities.

Her other interests include building human-centered AI systems that are more reliable and safe to use in various contexts through better alignment techniques, as well as developing robust and meaningful evaluation frameworks to investigate emergent behaviors of LLMs that are challenging to measure.

Mohit Bansal

Parker Distinguished Professor, UNC Chapel Hill

Dr. Mohit Bansal is the John R. & Louise S. Parker Distinguished Professor and the Director of the MURGe-Lab (UNC-NLP Group) in the Computer Science department at the University of North Carolina (UNC) Chapel Hill. Prior to this, he was a research assistant professor (3-year endowed position) at TTI-Chicago.

He received his Ph.D. in 2013 from the University of California at Berkeley (where he was advised by Dan Klein) and his B.Tech. from the Indian Institute of Technology at Kanpur in 2008.

His research expertise is in natural language processing and multimodal machine learning, with a particular focus on multimodal generative models, grounded and embodied semantics, reasoning and planning agents, faithful language generation, and interpretable, efficient, and generalizable deep learning.

He is an AAAI Fellow and a recipient of the Presidential Early Career Award for Scientists and Engineers (PECASE), IIT Kanpur Young Alumnus Award, DARPA Director’s Fellowship, NSF CAREER Award, Google Focused Research Award, Microsoft Investigator Fellowship, Army Young Investigator Award (YIP), DARPA Young Faculty Award (YFA), and outstanding paper awards at ACL, CVPR, EACL, COLING, CoNLL, and TMLR. He has been a keynote speaker at the AACL 2023, CoNLL 2023, and INLG 2022 conferences. His service includes roles as EMNLP and CoNLL Program Co-Chair, member of the ACL Executive Committee and the ACM Doctoral Dissertation Award Committee, ACL Americas Sponsorship Co-Chair, and Associate/Action Editor for the TACL, CL, IEEE/ACM TASLP, and CSL journals.

Junyang Lin

Research Scientist, Alibaba Qwen

Junyang is the tech lead of the Qwen Team at Alibaba Group. He is responsible for building Qwen, the series of large language models and multimodal models, and leads the open-source release of the models.

Previously, he worked on large-scale pretraining and multimodal pretraining, and led the development of models including OFA, Chinese-CLIP, and M6.
